
Slurm on Fermilab USQCD Clusters


SLURM (Simple Linux Utility for Resource Management) is an open-source, fault-tolerant, and highly scalable cluster resource manager and job scheduler, currently developed by SchedMD. Originally developed for large Linux clusters at Lawrence Livermore National Laboratory, SLURM is used on many of the TOP500 supercomputers around the globe.

If you have questions about job dispatch priorities on the Fermilab LQCD clusters, please visit this page or email your question to hpc-admin@fnal.gov.


Slurm Commands


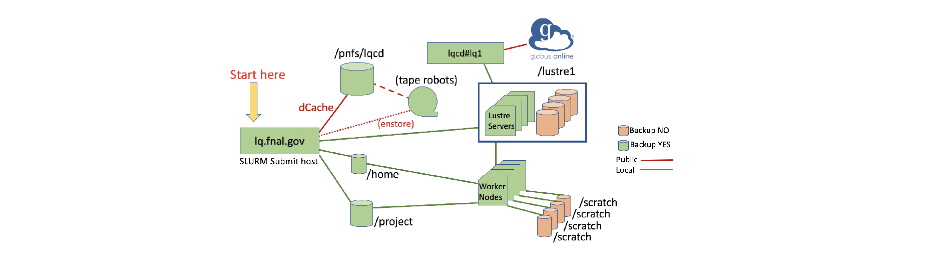

Log in to the appropriate submit host (see "Start Here" in the figure above) to run Slurm commands for the corresponding accounts and resources.

- scontrol and squeue: Job control and monitoring.

- sbatch: Batch job submission.

- salloc: Request an interactive job session (allocation).

- srun: Launch a job or a parallel job step.

- sinfo: Node information and cluster status.

- sacct: Accounting data for jobs and job steps.

- Useful environment variables include $SLURM_JOB_NODELIST (older name $SLURM_NODELIST) and $SLURM_JOB_ID (older name $SLURM_JOBID).
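As a small illustration of squeue and sacct, the two shell functions below list your own pending and running jobs and print the accounting record of a single job. The names myjobs and jobinfo are hypothetical, chosen for this sketch rather than provided by the site; they assume a POSIX shell on a submit host where the Slurm client commands are on the PATH.

```shell
# Hypothetical convenience wrappers; source this file from a login shell or job script.

# List the current user's pending and running jobs.
myjobs() {
    squeue -u "${USER:-$(id -un)}"
}

# Show accounting data for one job ID (and its job steps).
jobinfo() {
    sacct -j "$1" --format=JobID,JobName,Partition,State,Elapsed
}
```

For example, jobinfo 46 reports the state and elapsed time of the job with ID 46.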


Slurm User Accounts


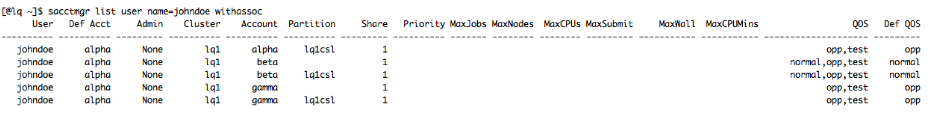

To check your "default" SLURM account, use the following command:

To check "all" the SLURM accounts you are associated with, use the following command:

NOTE: If you do not specify an account name during your job submission (using --account), the "default" account will be used to track usage.
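To charge a job to an account other than your default, pass --account at submission time; the same option can also be placed in the job script as an #SBATCH --account=... line. The sketch below wraps this in a hypothetical helper, submit_to_account; the account name myproject in the usage comment is a placeholder for one of the accounts you are associated with.

```shell
# Hypothetical helper: submit a batch script charged to an explicit account.
# Usage: submit_to_account ACCOUNT SCRIPT [ARGS...]
submit_to_account() {
    account="$1"
    shift
    # --account applies to this job only; your default account is unchanged
    sbatch --account="$account" "$@"
}

# Example (placeholder account name):
#   submit_to_account myproject myscript.sh
```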

Slurm Resource Types

| SLURM partition (--partition) | Resource type | Description | Number of nodes (--nodes) | Tasks per node (--ntasks-per-node) | GPUs per node (--gres) | Max nodes per job |
|---|---|---|---|---|---|---|
| lq1csl | CPU | 2.50 GHz Intel Xeon Gold 6248 "Cascade Lake", 196 GB memory per node (4.9 GB/core), EDR Omni-Path | 183 | 40 | none | 88 |
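The per-partition limits in the table translate directly into sbatch options. As a sketch, assuming one MPI rank per core (at most 40 ranks per lq1csl node) and the 88-node per-job limit listed above, the hypothetical function lq1csl_header prints the #SBATCH lines for a given number of ranks, rounding the node count up.

```shell
# Hypothetical helper: print #SBATCH directives for an MPI job on lq1csl.
# Assumes one rank per core, i.e. up to 40 ranks per node (from the table).
lq1csl_header() {
    nranks="$1"
    per_node=40      # tasks per lq1csl node
    max_nodes=88     # max nodes per job on lq1csl
    # ceiling division: enough nodes to give every rank its own core
    nodes=$(( (nranks + per_node - 1) / per_node ))
    if [ "$nodes" -gt "$max_nodes" ]; then
        echo "lq1csl_header: $nranks ranks need $nodes nodes (limit $max_nodes)" >&2
        return 1
    fi
    echo "#SBATCH --partition=lq1csl"
    echo "#SBATCH --nodes=$nodes"
    echo "#SBATCH --ntasks=$nranks"
    echo "#SBATCH --ntasks-per-node=$per_node"
}
```

For example, lq1csl_header 160 requests 4 nodes. When both --ntasks and --ntasks-per-node are given, --ntasks takes precedence and --ntasks-per-node acts as a per-node maximum, so no more than the requested number of ranks is started.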

Using SLURM: examples

- Submit an interactive job requesting 12 "pi" nodes:

```
[user@lattice:~]$ srun --pty --nodes=12 --ntasks-per-node=16 --partition pi bash
[user@pi111:~]$ env | grep NTASKS
SLURM_NTASKS_PER_NODE=16
SLURM_NTASKS=192
[user@pi111:~]$ exit
```

- Submit an interactive job requesting two "pigpu" nodes with 4 GPUs per node:

```
[user@lattice:~]$ srun --pty --nodes=2 --partition pigpu --gres=gpu:4 bash
[user@pig607:~]$ PBS_NODEFILE=$(generate_pbs_nodefile)
[user@pig607:~]$ rgang --rsh=/usr/bin/rsh $PBS_NODEFILE nvidia-smi -L
pig607=
GPU 0: Tesla K40m (UUID: GPU-2fe2a84f-3de9-2ca0-60f0-db011d53a20c)
GPU 1: Tesla K40m (UUID: GPU-9afce23b-cfdf-2318-ed00-2b23c14337f1)
GPU 2: Tesla K40m (UUID: GPU-782960ea-d854-e6ee-26ce-363a4c9c01e2)
GPU 3: Tesla K40m (UUID: GPU-ee804701-10ac-919e-ae64-27888dcb4645)
pig608=
GPU 0: Tesla K40m (UUID: GPU-b20a4059-56c2-b36a-ba31-1403fa6de2dc)
GPU 1: Tesla K40m (UUID: GPU-af290605-caeb-50e8-a4ca-fd533098c302)
GPU 2: Tesla K40m (UUID: GPU-16ab19e4-9835-5eb2-9b8b-1e479753d20b)
GPU 3: Tesla K40m (UUID: GPU-2b3d082e-3113-617a-dcc6-26eee33e3b2d)
[user@pig607:~]$ exit
```
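The interactive GPU example relies on the site-provided generate_pbs_nodefile command, whose implementation is not shown on this page. Purely to illustrate the idea, the sketch below builds a Torque-style nodefile (one hostname per task) from $SLURM_JOB_NODELIST using scontrol show hostnames, which expands a compact list such as pig[607-608] into one host per line. The function name make_nodefile and its default output path are hypothetical, and it assumes every node runs the same number of tasks.

```shell
# Hypothetical sketch; not the implementation of generate_pbs_nodefile.
# Writes one line per task, like Torque's $PBS_NODEFILE, and prints the path.
make_nodefile() {
    nodefile="${1:-${TMPDIR:-/tmp}/nodefile.${SLURM_JOB_ID:-$$}}"
    # SLURM_NTASKS_PER_NODE is set only when --ntasks-per-node was requested
    per_node="${SLURM_NTASKS_PER_NODE:-1}"
    : > "$nodefile"                  # create or truncate the file
    # expand e.g. pig[607-608] into one hostname per line
    for host in $(scontrol show hostnames "$SLURM_JOB_NODELIST"); do
        i=0
        while [ "$i" -lt "$per_node" ]; do
            echo "$host" >> "$nodefile"
            i=$((i + 1))
        done
    done
    echo "$nodefile"
}
```

Inside a job, PBS_NODEFILE=$(make_nodefile) would then point at a file with one line per task.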

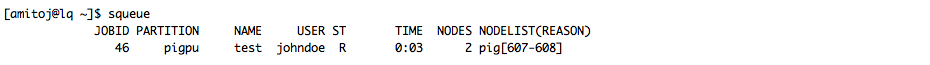

- Submit a batch job requesting 4 GPUs, i.e. one "pigpu" node:

```
[user@lattice ~]$ cat myscript.sh
#!/bin/sh
#SBATCH --job-name=test
#SBATCH --partition=pigpu
#SBATCH --nodes=1
#SBATCH --gres=gpu:4

nvidia-smi -L
sleep 5
exit

[user@lattice ~]$ sbatch myscript.sh
Submitted batch job 46
```

Once the batch job completes, its output is in slurm-46.out, which by default is written to the directory from which the job was submitted:

```
[user@lattice ~]$ cat slurm-46.out
GPU 0: Tesla K40m (UUID: GPU-2fe2a84f-3de9-2ca0-60f0-db011d53a20c)
GPU 1: Tesla K40m (UUID: GPU-9afce23b-cfdf-2318-ed00-2b23c14337f1)
GPU 2: Tesla K40m (UUID: GPU-782960ea-d854-e6ee-26ce-363a4c9c01e2)
GPU 3: Tesla K40m (UUID: GPU-ee804701-10ac-919e-ae64-27888dcb4645)
```

SLURM Reporting


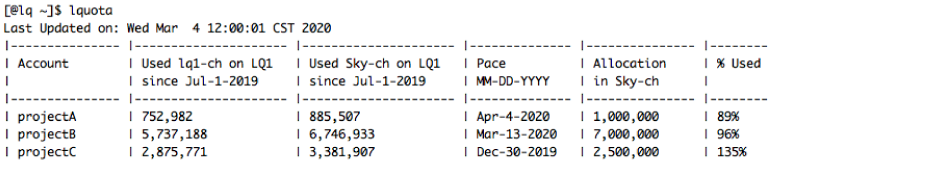

Running the lquota command on lq.fnal.gov reports your allocation usage.

Units: lq1-ch = lq1 core-hour; Sky-ch = Sky core-hour; 1 lq1-ch = 1.05 Sky-ch.
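As a worked illustration of the conversion factor, the hypothetical function below reports a usage figure in both lq1 and Sky core-hours. It assumes usage is simply cores × wall-clock hours; how lquota actually meters jobs (for example, whether whole nodes are charged) is not described here.

```shell
# Hypothetical helper: usage in lq1-ch and Sky-ch for CORES cores over HOURS hours.
# Assumes charge = cores x hours; 1 lq1-ch = 1.05 Sky-ch (from the text above).
lq1_charge() {
    awk -v c="$1" -v h="$2" 'BEGIN {
        lq1_ch = c * h            # lq1 core-hours
        sky_ch = lq1_ch * 1.05    # equivalent Sky core-hours
        printf "%.2f lq1-ch = %.2f Sky-ch\n", lq1_ch, sky_ch
    }'
}
```

For example, four full lq1csl nodes (4 × 40 = 160 cores) for 10 hours: lq1_charge 160 10 prints 1600.00 lq1-ch = 1680.00 Sky-ch.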

Usage reports are also available on the Allocations page. If you have questions about the reports or notice discrepancies in the data, please email lqcd-admin@fnal.gov.

SLURM Environment variables

| Variable name | Description | Example value | PBS/Torque analog |
|---|---|---|---|
| $SLURM_JOB_ID | Job ID | 5741192 | $PBS_JOBID |
| $SLURM_JOBID | Deprecated; same as $SLURM_JOB_ID | | |
| $SLURM_JOB_NAME | Job name | myjob | $PBS_JOBNAME |
| $SLURM_SUBMIT_DIR | Submit directory | /project/charmonium | $PBS_O_WORKDIR |
| $SLURM_JOB_NODELIST | Nodes assigned to the job | pi1[01-05] | cat $PBS_NODEFILE |
| $SLURM_SUBMIT_HOST | Host the job was submitted from | lattice.fnal.gov | $PBS_O_HOST |
| $SLURM_JOB_NUM_NODES | Number of nodes allocated to the job | 2 | $PBS_NUM_NODES |
| $SLURM_CPUS_ON_NODE | Number of cores allocated on the current node | 8 (first node), 3 (second node) | $PBS_NUM_PPN |
| $SLURM_NTASKS | Total number of tasks (cores, at one core per task) in the job | 11 | $PBS_NP |
| $SLURM_NODEID | Index of the current node among the nodes assigned to the job | 0 | $PBS_O_NODENUM |
| $SLURM_LOCALID | Index of the task within its node | 4 | $PBS_O_VNODENUM |
| $SLURM_PROCID | Index of the task within the job | 0 | $PBS_O_TASKNUM - 1 |
| $SLURM_ARRAY_TASK_ID | Job array index | 0 | $PBS_ARRAYID |
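The PBS/Torque analog column suggests a simple compatibility layer for older job scripts. The sketch below (the function name pbs_compat is hypothetical) exports PBS-style variables from their Slurm counterparts; it covers only the variables in the table and does not create $PBS_NODEFILE. The per-task indices ($SLURM_NODEID, $SLURM_LOCALID, $SLURM_PROCID) differ from task to task, so call it inside each srun-launched task if those values matter.

```shell
# Hypothetical compatibility shim: map Slurm job variables to PBS/Torque names
# as listed in the table above. Call it near the top of a Slurm job script.
pbs_compat() {
    export PBS_JOBID="$SLURM_JOB_ID"
    export PBS_JOBNAME="$SLURM_JOB_NAME"
    export PBS_O_WORKDIR="$SLURM_SUBMIT_DIR"
    export PBS_O_HOST="$SLURM_SUBMIT_HOST"
    export PBS_NUM_NODES="$SLURM_JOB_NUM_NODES"
    export PBS_NUM_PPN="$SLURM_CPUS_ON_NODE"
    export PBS_NP="$SLURM_NTASKS"
    export PBS_O_NODENUM="$SLURM_NODEID"
    export PBS_O_VNODENUM="$SLURM_LOCALID"
    # SLURM_PROCID counts from 0, PBS_O_TASKNUM from 1
    export PBS_O_TASKNUM=$(( ${SLURM_PROCID:-0} + 1 ))
    export PBS_ARRAYID="$SLURM_ARRAY_TASK_ID"
}
```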

Binding and Distribution of tasks

- A good description of MPI process affinity binding with srun is available here.

- Reasonable affinity choices by partition type on the Fermilab LQCD clusters are:

- Intel (lq1): --distribution=cyclic:cyclic --cpu_bind=sockets --mem_bind=no
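As a sketch of how the lq1 recommendation could be applied inside a batch script, the hypothetical helper launch_lq1 passes those three options to srun ahead of the program and its arguments. The flags are copied verbatim from the recommendation; the program name in the usage comment is a placeholder.

```shell
# Hypothetical helper: start an MPI program with the lq1 affinity settings above.
# Usage (inside a Slurm allocation): launch_lq1 ./my_app [ARGS...]
launch_lq1() {
    srun --distribution=cyclic:cyclic \
         --cpu_bind=sockets \
         --mem_bind=no \
         "$@"
}
```

Keeping the options in one function means every launch in a job script uses the same binding policy.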


Launching MPI processes


Please refer to the following page for recommended MPI launch options.


Additional useful information


- Fermi National Accelerator Laboratory

- Managed by Fermi Research Alliance, LLC

- for the U.S. Department of Energy Office of Science
